Overview of the Components and Packages

PPC Robot is a distributed application designed with the cloud in mind. Work is distributed using the Celery framework. The frontend is a Single Page Application (SPA) written in Vue.js. The application uses Django Channels to handle WebSocket connections between the SPA frontend and the web backend.

The application is split into several independent components. These components communicate through a virtual message broker implemented using Redis. Each runnable component is distributed as a Docker image. The application is designed to run in a Kubernetes cluster, but Kubernetes is not a hard dependency, and the application can easily be deployed to another orchestration platform, such as Docker Swarm.

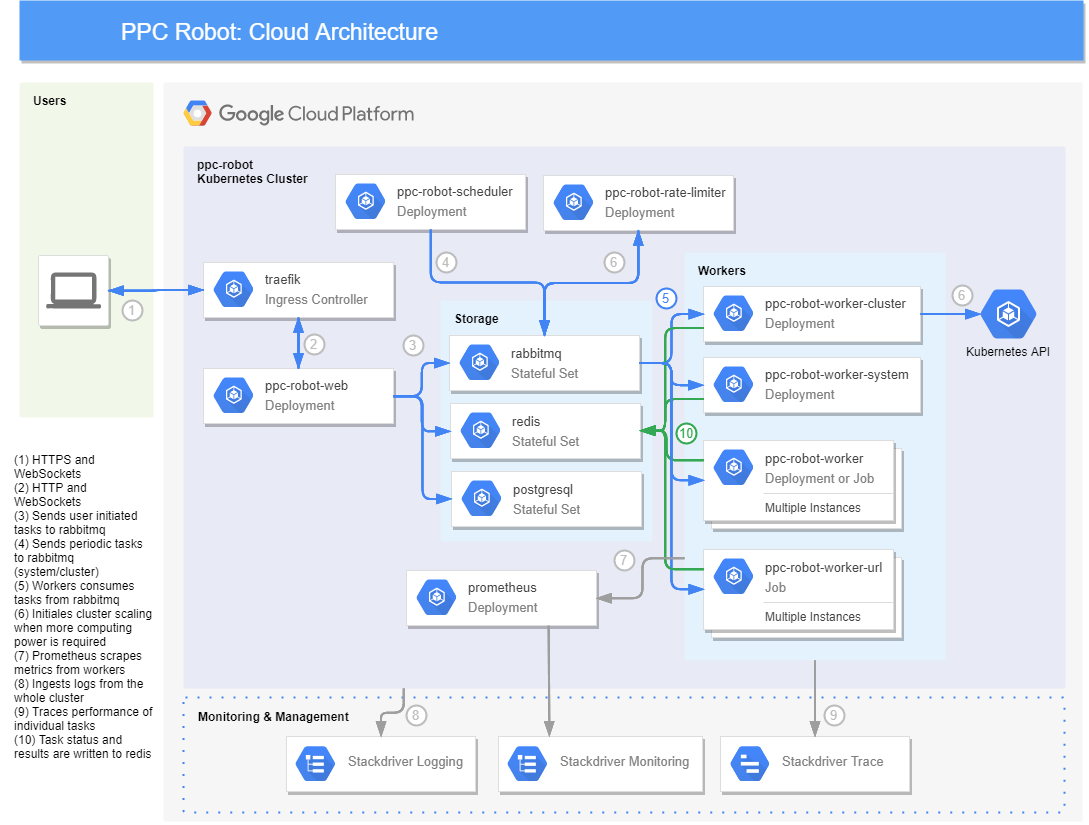

The components and relations between them are outlined in the following diagram:

The following text describes the components of the application and their requirements in terms of network, CPU, memory, scalability and relation to other services.

ppc-robot-lib

The base library. Contains utilities, adapters for accessing external services, the core database model (so that it is available to every part of the application), and so on.

This component is not run on its own, so the requirements are not listed.

Sources are stored in the ppc_robot_lib subfolder.

ppc-robot-task-types

Contains definitions of all job/report types. Like ppc-robot-lib, this component cannot run on its own.

Sources are stored in the ppc_robot_task_types subfolder.

ppc-robot-web

This component runs the HTTP server, which serves the web-based user interface and its assets, and processes HTTP requests and WebSocket messages. This is the only public-facing component of the PPC Robot.

The HTTP server serves static assets, several dynamic pages (login, application index, etc.) and listens for WebSocket connections. HTTP requests and WS messages are processed independently.

Sources are stored in the ppc_robot_web subfolder.

Requirements

- Network:

Same requirements as a regular HTTP server – needs a public IP address. Traffic is low thanks to compression of static assets and the SPA nature of the application. Must handle many simultaneous connections and can be load-balanced.

- CPU:

The workload of this component is mostly IO-bound, so CPU requirements are low.

- Memory:

Low to medium memory requirements with peaks – depends on the number of currently active users.

- Scalability:

Can run in multiple dynamic replicas; at least 2 replicas are recommended for high availability (HA).

- Links to other services:

database access,

access to Redis – sends report tasks and system tasks, and uses Redis as the Django Channels channel layer.
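To illustrate the Redis link, a Django Channels channel layer backed by Redis is usually declared in the Django settings. The fragment below is only a sketch: it assumes the `channels_redis` package and a Redis service reachable as `redis` on port 6379, neither of which is confirmed by this document.

```python
# settings.py fragment (sketch). The backend package (channels_redis) and the
# Redis host/port are assumptions, not taken from the PPC Robot sources.
CHANNEL_LAYERS = {
    "default": {
        "BACKEND": "channels_redis.core.RedisChannelLayer",
        "CONFIG": {
            # One (host, port) pair per Redis server used by the layer.
            "hosts": [("redis", 6379)],
        },
    },
}
```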

ppc-robot-worker

Worker for regular tasks – generates reports. Reports are run either on schedule or on demand at a user's request. A task usually executes as follows:

1. Fetch the data – from KBs for small reports to hundreds of MBs for really large reports (keywords etc.).

2. Process the data – works with between MBs and GBs of data, depending on the report type; burst CPU usage.

3. Write the data – from tens of KBs to tens of MBs for large reports.

These phases might repeat as some of the reports might be processed one sheet at a time.
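The repeated fetch, process and write phases can be pictured as a loop over sheets. The following is a minimal sketch, not the actual worker code: `run_report` and its three callables are hypothetical names introduced for illustration only.

```python
from typing import Callable, Iterable, List

Rows = List[dict]


def run_report(
    sheets: Iterable[str],
    fetch: Callable[[str], Rows],
    process: Callable[[Rows], Rows],
    write: Callable[[str, Rows], None],
) -> int:
    """Run fetch -> process -> write once per sheet; return rows written."""
    written = 0
    for sheet in sheets:
        raw = fetch(sheet)        # network-bound: download source data
        result = process(raw)     # CPU- and memory-bound: transform the data
        write(sheet, result)      # network-bound: upload the output
        written += len(result)
        # `raw` and `result` are rebound on the next iteration, so data of
        # earlier sheets can be garbage-collected.
    return written


if __name__ == "__main__":
    # In-memory stand-ins for the external services.
    storage = {}
    total = run_report(
        ["campaigns", "keywords"],
        fetch=lambda sheet: [{"sheet": sheet, "clicks": n} for n in range(3)],
        process=lambda rows: [r for r in rows if r["clicks"] > 0],
        write=lambda sheet, rows: storage.update({sheet: rows}),
    )
    print(total, sorted(storage))
```

Processing one sheet at a time keeps peak memory closer to the size of the largest sheet than to the size of the whole report.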

Sources are stored in the ppc_robot_worker subfolder, with the entry point in the ppc_robot_worker.task_worker module.

The worker is just a set of tasks and a thin wrapper that configures and runs a Celery worker.

Requirements

- Network:

Burst network IO – fetches large amounts of data from external services for further processing; results are then uploaded to external storage, usually Google Drive.

- CPU:

Combination of IO and CPU workload – usually in bursts.

- Memory:

High memory requirements – up to GBs of data per report.

- Scalability:

Designed to run in multiple replicas.

- Links to other services:

database access,

access to Redis – Celery task queueing and task result tracking.

ppc-robot-worker-url-check

Worker for URL checkers. The process of generating the report is the same as for the regular tasks, but the runtime characteristics are different – the workload is mostly IO-bound (network), and memory requirements are lower or on par.

Runs from the same codebase as ppc-robot-worker, but it consumes another task queue: url_check_tasks.
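Sharing one codebase across several queues is commonly expressed with Celery's `task_routes` setting, which maps task-name patterns to queues. The sketch below mimics that mapping in plain Python. The queue names `url_check_tasks`, `system_tasks` and `cluster_tasks` come from this document; the task module names and the use of Celery's default queue name (`celery`) for regular report tasks are assumptions.

```python
from fnmatch import fnmatchcase

# Shape of Celery's `task_routes` setting: glob pattern -> routing options.
# The task module names on the left-hand side are hypothetical.
TASK_ROUTES = {
    "ppc_robot_worker.url_check.*": {"queue": "url_check_tasks"},
    "ppc_robot_worker.system.*": {"queue": "system_tasks"},
    "ppc_robot_worker.cluster.*": {"queue": "cluster_tasks"},
}

# Celery's built-in default queue name; whether PPC Robot uses it for
# regular report tasks is an assumption.
DEFAULT_QUEUE = "celery"


def queue_for(task_name: str) -> str:
    """Return the queue a task name would be routed to (first match wins)."""
    for pattern, options in TASK_ROUTES.items():
        if fnmatchcase(task_name, pattern):
            return options["queue"]
    return DEFAULT_QUEUE
```

A worker is then limited to one queue with the `-Q` option of the Celery command line, for example `celery -A <app> worker -Q url_check_tasks`, where `<app>` stands for the application module.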

Requirements

- Network:

Network IO in every stage of the report execution. Downloads large amounts of data and then issues an HTTP request for every URL in the account. Requires a stable internet connection to prevent timeouts and connection issues.

- CPU:

Workload is mostly IO-bound; only URL deduplication and result generation require CPU, in bursts.

- Memory:

High memory requirements – up to hundreds of MBs of data per report.

- Scalability:

Designed to run in multiple replicas.

- Links to other services:

database access,

access to Redis – Celery task queueing and task result tracking.

ppc-robot-worker-system

Processes periodic system tasks: sending e-mail notifications, updating account lists, queuing tasks for execution.

This service must run in order to get reports processed – at specified intervals, a system task is queued that checks which jobs need to run and queues tasks for them. Please note that this is only the worker – scheduling the tasks is a job for the scheduler.

This also shares its codebase with ppc-robot-worker, but it consumes another task queue: system_tasks.

Requirements

- Network:

Burst network IO, but low requirements – up to MBs per task for really large accounts or notification e-mails.

- CPU:

Low CPU requirements, run-time is not critical.

- Memory:

Medium memory requirements (~600 MB) to fit large accounts.

- Scalability:

Can and probably should run in multiple replicas.

- Links to other services:

database access,

access to Redis – consumes and sends system tasks.

ppc-robot-worker-cluster

Processes periodic cluster scaling tasks. Estimates computing resource requirements and scales up the cluster accordingly. This component only creates parallel jobs – the Kubernetes cluster autoscaler ensures that the required number of nodes is created.

Scale-down is also handled by the cluster autoscaler – each worker pod terminates when it has not received any work for a specified period of time. By default, empty nodes are deleted after 10 minutes of inactivity.
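The idle-termination behaviour of a worker pod can be sketched as a small timer that is reset whenever a task arrives. This is an illustration only: the class name `IdleWatch`, its methods and the 300-second timeout are hypothetical, and the document does not show how PPC Robot actually implements this.

```python
import time
from typing import Callable


class IdleWatch:
    """Tracks how long a worker has gone without receiving a task."""

    def __init__(self, timeout_s: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._timeout_s = timeout_s
        self._clock = clock          # injectable so the logic can be tested
        self._last_activity = clock()

    def task_received(self) -> None:
        """Reset the idle timer; call this whenever a task arrives."""
        self._last_activity = self._clock()

    def should_exit(self) -> bool:
        """True once the worker has been idle for at least the timeout."""
        return self._clock() - self._last_activity >= self._timeout_s


if __name__ == "__main__":
    watch = IdleWatch(timeout_s=300)  # hypothetical 5-minute idle period
    print(watch.should_exit())        # a freshly started worker is not idle yet
```

Once the process exits and its pod completes, the node it ran on may become empty, which is the condition under which the cluster autoscaler removes the node.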

This also shares its codebase with ppc-robot-worker, but it consumes another task queue: cluster_tasks.

Requirements

- Network:

No external connectivity required.

- CPU:

Burst CPU, requires quick response times – evaluation of the current status should complete in under 0.25 s.

- Memory:

Small memory requirements (~200 MB).

- Scalability:

Can and probably should run in multiple replicas.

- Links to other services:

database access,

access to Redis – consumes and sends cluster tasks,

access to Kubernetes API using dedicated service account.

ppc-robot-scheduler

Schedules tasks that should run at regular intervals – sending summary e-mails, report execution, account updates and so on.

In PPC Robot, this is just a thin wrapper that runs Celery beat with the proper configuration of tasks.
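A Celery beat configuration is essentially a dictionary assigned to the `beat_schedule` setting. The fragment below sketches its shape. The queue names `system_tasks` and `cluster_tasks` come from this document; the entry names, task names and intervals are hypothetical placeholders.

```python
from datetime import timedelta

# Shape of Celery's `beat_schedule` setting. Task names and intervals are
# hypothetical; only the queue names are taken from this document.
BEAT_SCHEDULE = {
    "queue-due-jobs": {
        "task": "ppc_robot_worker.system.queue_due_jobs",
        "schedule": timedelta(minutes=1),
        # `options` are passed to apply_async(), so `queue` selects the queue.
        "options": {"queue": "system_tasks"},
    },
    "evaluate-cluster-size": {
        "task": "ppc_robot_worker.cluster.evaluate",
        "schedule": timedelta(seconds=30),
        "options": {"queue": "cluster_tasks"},
    },
}
```

The scheduler process only enqueues these entries when they are due; the tasks themselves are executed by the workers that consume the corresponding queues.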

Requirements

- Network:

No special requirements on the network, as this service just enqueues tasks.

- CPU:

Low CPU requirements, doesn’t do any intensive calculations.

- Memory:

Low.

- Scalability:

Currently forbidden – exactly one instance of this service must run, because several schedulers would each enqueue the same periodic tasks.

- Links to other services:

access to Redis – sends periodic tasks – both system and cluster.